Best AI Tools for Detecting Dishonest Responses During Online Interviews

Explore the best AI tools, including Sherlock, that detect dishonest or AI-generated responses during live video interviews and help prevent fraud.

The hiring process has quietly become one of the biggest victims of AI misuse.

Candidates now walk into interviews - or rather, video calls - with ChatGPT windows open, answers fed to them through hidden earbuds, or deepfake overlays that mimic someone else’s face or voice.

What used to be a conversation about skills is now a cat-and-mouse game of truth vs. technology.

Recruiters know something is off. Candidates sound too fluent. Answers are too polished. There’s a pause before every response - almost as if a model is thinking, not a person.

This is the new hiring reality. And to restore trust, a new generation of AI integrity tools is emerging - built to detect dishonest responses, proxy participants, and AI-generated behavior in real time.

The Complication

Traditional proctoring tools were built for exams, not interviews.

They detect whether someone left the tab - not whether someone else is speaking on behalf of the candidate.

Several things make dishonest responses in interviews particularly hard to detect:

- Multi-modal deception - Candidates can fake not just answers, but tone, facial expressions, and even voice.

- Subtle AI assistance - People use earphones connected to another person or real-time GPT whispering tools.

- Deepfake overlays - Video masking tools can swap faces, making identity checks unreliable.

- Scripted responses - AI tools generate polished answers that miss the context of the question, and recruiters often mistake them for competence.

These aren’t theoretical problems anymore. They’re showing up in day-to-day interviews across industries, especially in high-value remote hiring.

AI Tools Fighting Dishonesty in Interviews

Here are some of the most promising AI tools that detect dishonest responses during video interviews - each approaching the problem differently.

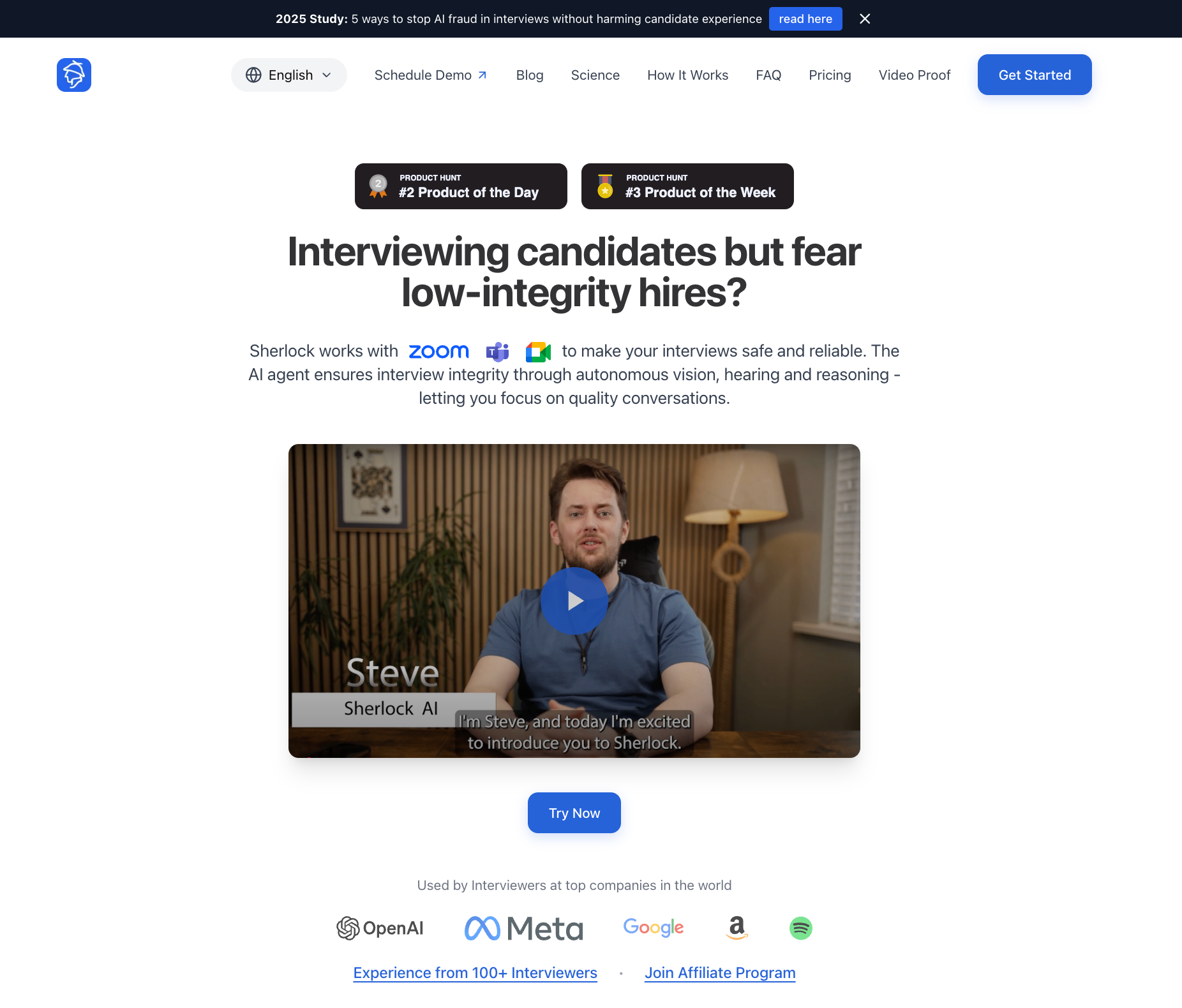

1. Sherlock

Best for: Hiring managers and recruiting teams who want an enterprise-grade, forensic-grade AI proctor inside live interviews.

How it works:

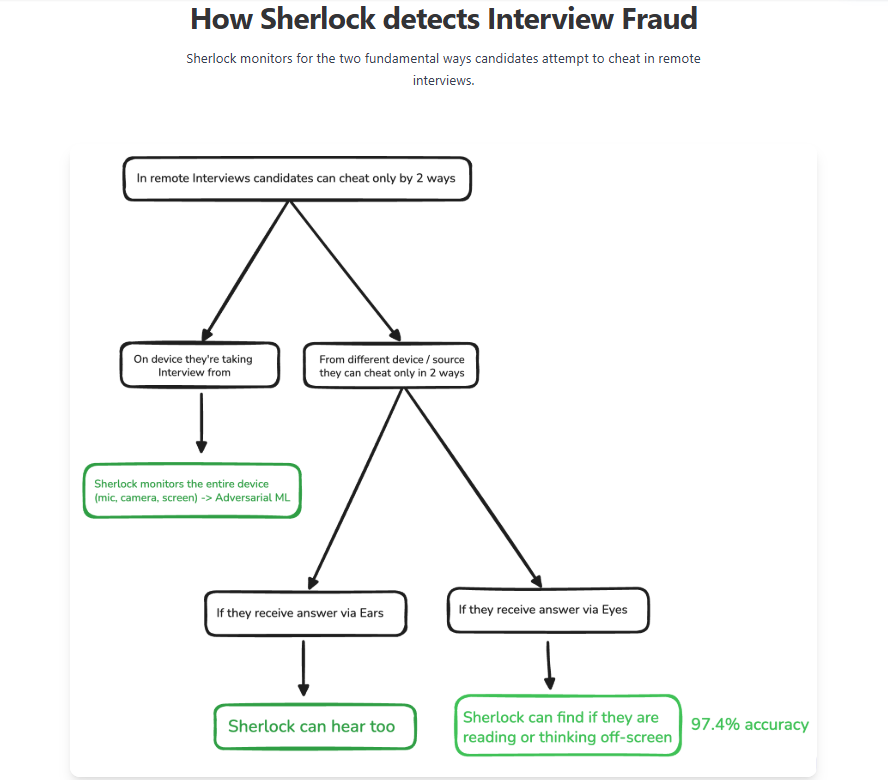

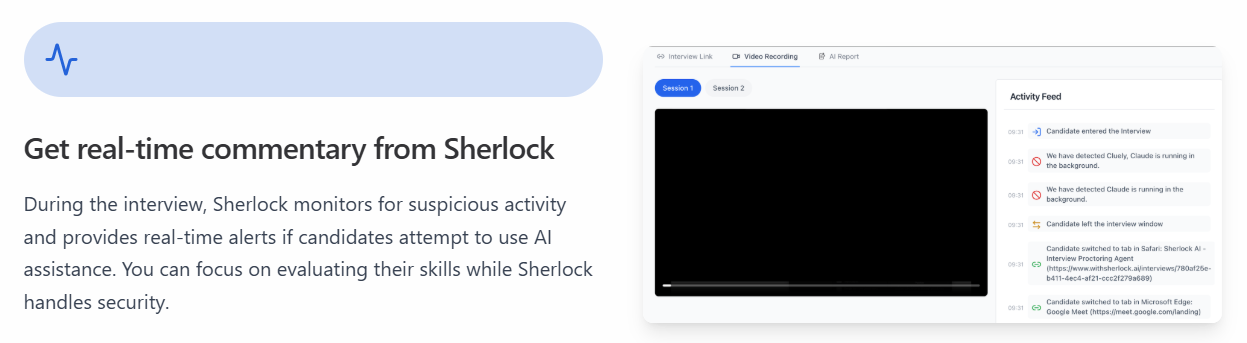

Sherlock joins your Zoom, Teams, or Google Meet as an invisible AI agent. It watches for suspicious behavior - like mismatched lip movements, multiple faces, tab switches, whispering, or unusual speech latency - and flags potential fraud or AI-assisted responses.

It uses multi-modal analysis (video, audio, transcript) and builds behavioral signatures for every candidate.
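
To make the idea of multi-modal analysis concrete, here is a minimal sketch of how several per-modality signals could be combined into one risk score. This is not Sherlock's implementation, which is not described here. The signal names, the weights, and the 0.5 threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


# Hypothetical per-modality signals, each normalised to [0, 1]
# (0 = nothing unusual, 1 = strongly suspicious). Names are illustrative.
@dataclass
class InterviewSignals:
    lip_sync_mismatch: float  # video vs. audio alignment
    extra_faces: float        # more than one face in frame
    whisper_audio: float      # low-volume secondary speech
    response_latency: float   # unusually long or uniform delays


# Assumed weights; a real system would tune or learn these.
WEIGHTS = {
    "lip_sync_mismatch": 0.35,
    "extra_faces": 0.25,
    "whisper_audio": 0.20,
    "response_latency": 0.20,
}


def risk_score(signals: InterviewSignals) -> float:
    """Weighted sum of the signals, clamped to [0, 1]."""
    total = sum(WEIGHTS[name] * getattr(signals, name) for name in WEIGHTS)
    return max(0.0, min(1.0, total))


def flagged(signals: InterviewSignals, threshold: float = 0.5) -> list[str]:
    """Names of the individual signals at or above the threshold."""
    return [name for name in WEIGHTS if getattr(signals, name) >= threshold]
```

Returning the list of flagged signals alongside the score means a reviewer sees why an interview was flagged, not just a number.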

Why it stands out:

Sherlock doesn’t just detect anomalies; it learns what honesty looks like for each user. It also auto-summarizes interviews and creates a “trust score” alongside the skill score.

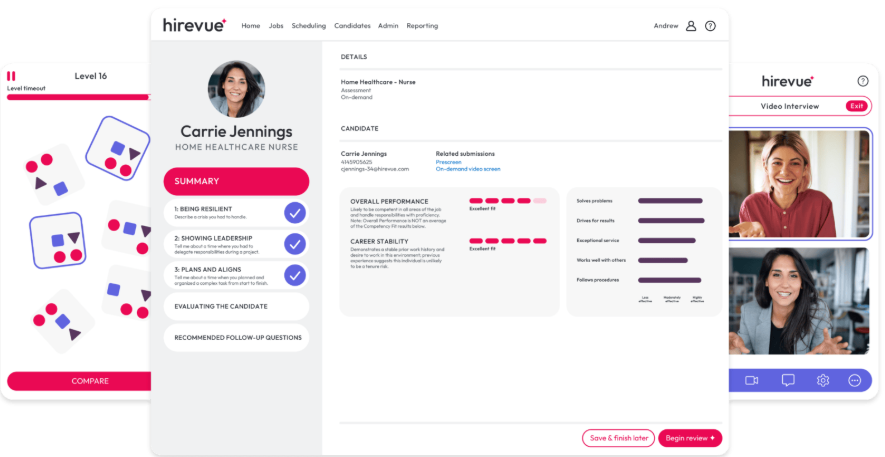

2. HireVue

Best for: Structured AI interviews and large-scale screening.

How it works:

HireVue uses AI to analyze verbal and non-verbal cues in candidate responses. It looks at tone, sentiment, pauses, and gaze direction to infer authenticity and engagement.
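
Pauses are the easiest of these cues to illustrate. The sketch below measures how long a candidate takes to start answering, given a timestamped transcript. It is not HireVue's method. The `(speaker, start_sec, end_sec)` tuple format and the speaker labels are assumptions made for the example.

```python
from statistics import mean, pstdev


def response_latencies(turns):
    """Seconds between the end of each interviewer turn and the start
    of the candidate turn that immediately follows it.

    `turns` is a time-ordered list of (speaker, start_sec, end_sec) tuples;
    this format is assumed for illustration.
    """
    latencies = []
    for prev, cur in zip(turns, turns[1:]):
        if prev[0] == "interviewer" and cur[0] == "candidate":
            # Overlapping speech gives a negative gap; treat it as zero.
            latencies.append(max(0.0, cur[1] - prev[2]))
    return latencies


def latency_summary(latencies):
    """Mean and population standard deviation of the latencies."""
    if not latencies:
        return {"mean": 0.0, "stdev": 0.0}
    return {"mean": mean(latencies), "stdev": pstdev(latencies)}
```

A long but very consistent delay before every answer may be worth a second look, though on its own it proves nothing: network lag and careful thinkers produce the same pattern.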

Limitation:

While powerful for structured one-way interviews, it’s less effective at detecting real-time proxy fraud or deepfakes.

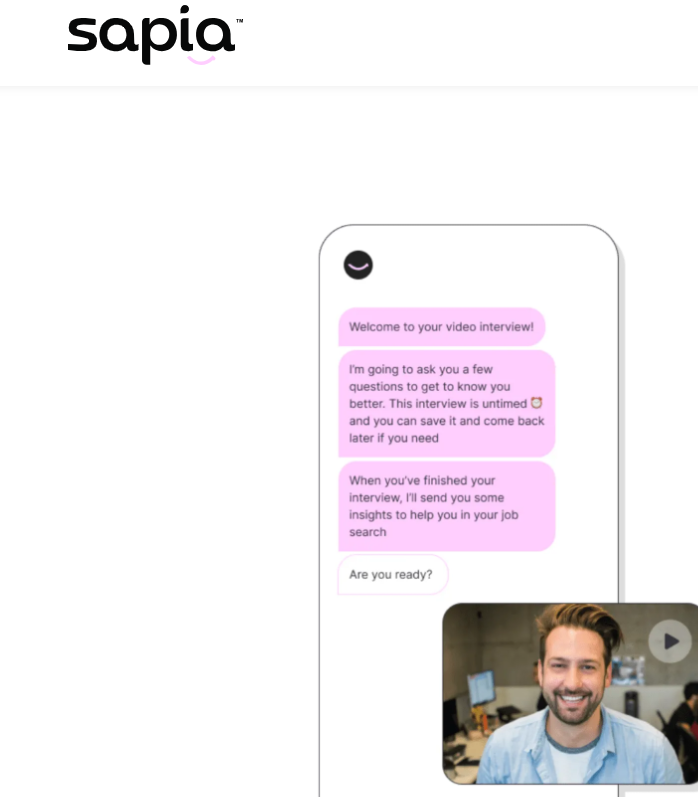

3. Sapia.ai

Best for: Chat-based honesty detection.

How it works:

Sapia uses linguistic and psycholinguistic AI to detect unnatural writing patterns and AI-generated text in chat interviews.

It can spot over-optimized or “machine-styled” phrasing patterns that betray ChatGPT-type responses.
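
As a rough illustration of what "machine-styled" phrasing could mean in measurable terms, the sketch below computes a few simple style features from a written answer. It is not Sapia's model. The choice of features is an assumption, and heuristics this simple are weak evidence at best.

```python
import re
from statistics import mean, pstdev

WORD = r"[A-Za-z']+"


def style_features(text: str) -> dict:
    """Simple stylometric features for one written answer."""
    # Split on sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    words = re.findall(WORD, text.lower())
    lengths = [len(re.findall(WORD, s)) for s in sentences]
    return {
        "sentence_count": len(sentences),
        "mean_sentence_len": mean(lengths) if lengths else 0.0,
        # Very uniform sentence lengths give a low spread.
        "sentence_len_stdev": pstdev(lengths) if lengths else 0.0,
        # Share of distinct words; a crude vocabulary-variety measure.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }
```

Features like these would normally feed a trained classifier along with many others. Treating any single value as proof of AI use would misfire on plenty of honest candidates.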

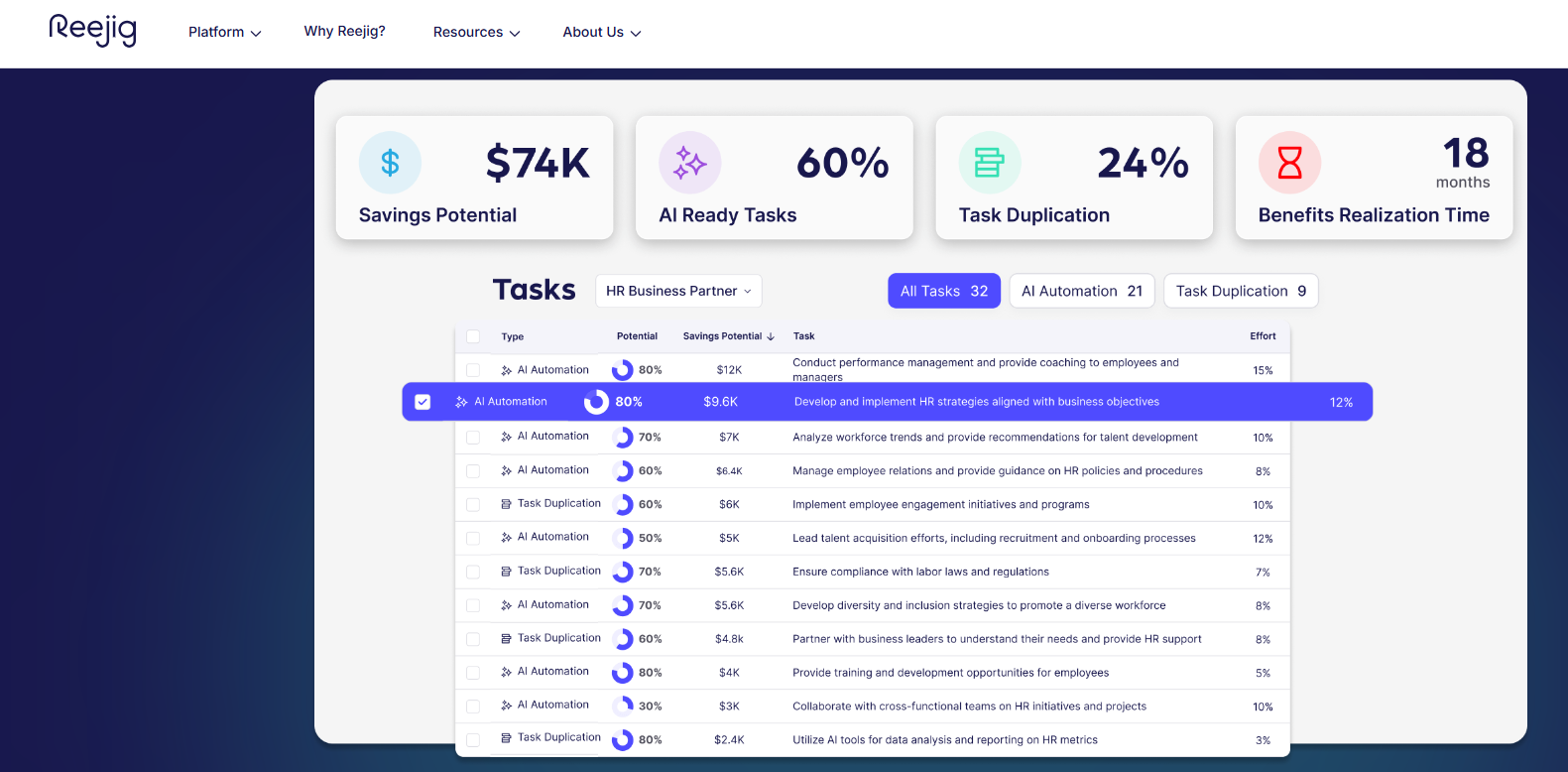

4. Reejig

Best for: Ethical AI scoring with fairness focus.

How it works:

Reejig builds ethical, auditable AI systems that help organizations identify skill patterns while ensuring bias-free evaluation. While not a fraud-detection tool per se, its explainable AI framework adds a trust layer to assessment analytics.

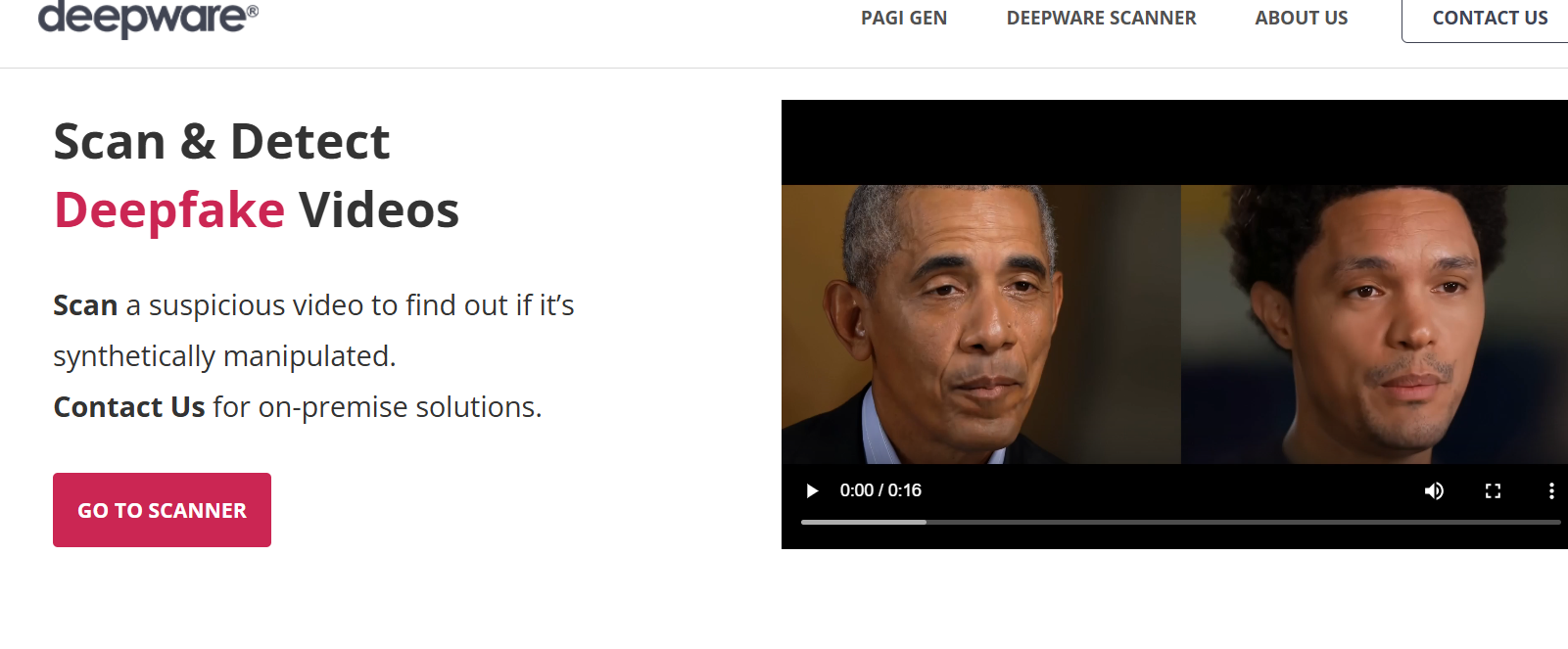

5. Deepware / Reality Defender

Best for: Detecting deepfakes and face-swaps.

How it works:

These tools scan live or recorded video streams for manipulation artifacts - pixel-level inconsistencies, temporal warping, and synthetic noise that indicate tampering.

They’re becoming a vital part of background verification and identity assurance stacks.
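
Temporal inconsistency can be shown with a toy example: measure how much each frame differs from the previous one, then flag sudden jumps. Production deepfake detectors rely on trained models, and nothing here reflects how Deepware or Reality Defender work. The flat grayscale frame format and the cutoff `k` are assumptions for the sketch.

```python
from statistics import median


def frame_deltas(frames):
    """Mean absolute pixel difference between consecutive frames.

    Each frame is assumed to be a non-empty flat list of grayscale
    values (0-255), and all frames have the same length.
    """
    deltas = []
    for prev, cur in zip(frames, frames[1:]):
        diff = sum(abs(a - b) for a, b in zip(prev, cur))
        deltas.append(diff / len(cur))
    return deltas


def temporal_outliers(deltas, k=5.0):
    """Indices of deltas more than k median absolute deviations (MADs)
    away from the median delta."""
    if not deltas:
        return []
    med = median(deltas)
    mad = median([abs(d - med) for d in deltas])
    if mad == 0:
        # Most deltas are identical; anything that differs stands out.
        return [i for i, d in enumerate(deltas) if d != med]
    return [i for i, d in enumerate(deltas) if abs(d - med) / mad > k]
```

A scene change or a camera bump triggers the same spike, so a check like this can only nominate frames for closer inspection.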

6. AuthBridge / IDfy

Best for: Identity verification in the hiring process.

How it works:

While not built for “dishonest response” detection, these tools verify that the person interviewed is the same person whose credentials were submitted, closing the loop on identity fraud before interviews even begin.
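
One common way to check that two face images show the same person is to compare their embedding vectors. The sketch below shows only that comparison step. It assumes the embeddings come from some face-recognition model, and the 0.8 threshold is a placeholder. It does not describe how any particular vendor performs verification.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no identity information
    return dot / (norm_a * norm_b)


def same_person(id_embedding, interview_embedding, threshold=0.8):
    """True if the two embeddings are at least `threshold` similar.

    The threshold is a placeholder; real systems calibrate it on
    labelled data to balance false accepts against false rejects.
    """
    return cosine_similarity(id_embedding, interview_embedding) >= threshold
```

For example, `same_person(embedding_from_id_photo, embedding_from_interview_frame)` would compare the submitted ID photo with a frame from the call, where both variable names are hypothetical.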

The New Standard of Interview Integrity

The future of interviews isn’t about catching cheaters - it’s about re-establishing trust in conversations between humans.

AI will soon be present on both sides of the table - candidates using it to assist, companies using it to detect and interpret.

The right path isn’t paranoia; it’s precision.

By layering AI tools like Sherlock into your workflow, you can separate human honesty from machine fluency, ensuring that interviews remain what they were meant to be - a truthful exchange of competence, not computation.

TL;DR Summary

| Problem | Old Approach | AI Solution |
|---|---|---|
| Candidates using ChatGPT or proxies | Manual observation | Sherlock – AI agent in interviews |
| Deepfake overlays | Visual verification | Deepware / Reality Defender |
| Scripted AI responses | Text pattern detection | Sapia.ai |
| Identity mismatch | ID check post-submission | AuthBridge / IDfy |
| Unnatural pauses, voice inconsistency | None | HireVue / Sherlock |

In the age of AI, trust will become the ultimate hiring currency.

The companies that adopt AI integrity layers early won’t just prevent fraud — they’ll hire more confidently, scale faster, and protect the one thing that can’t be automated: human authenticity.